Describe Anything AI

Advanced Region-Based Visual Understanding

Describe Anything AI generates rich, detailed descriptions of specific regions in images and videos. Select any area with a click, scribble, box, or mask, and the model describes exactly that region in the context of the surrounding scene.

Describe Anything AI Overview

The Describe Anything Model (DAM), presented here as Describe Anything AI, is an AI system designed to generate detailed, context-aware descriptions of specific regions within images and videos. It pairs a focal prompt and a localized vision backbone with a large language model to describe exactly the region a user selects.

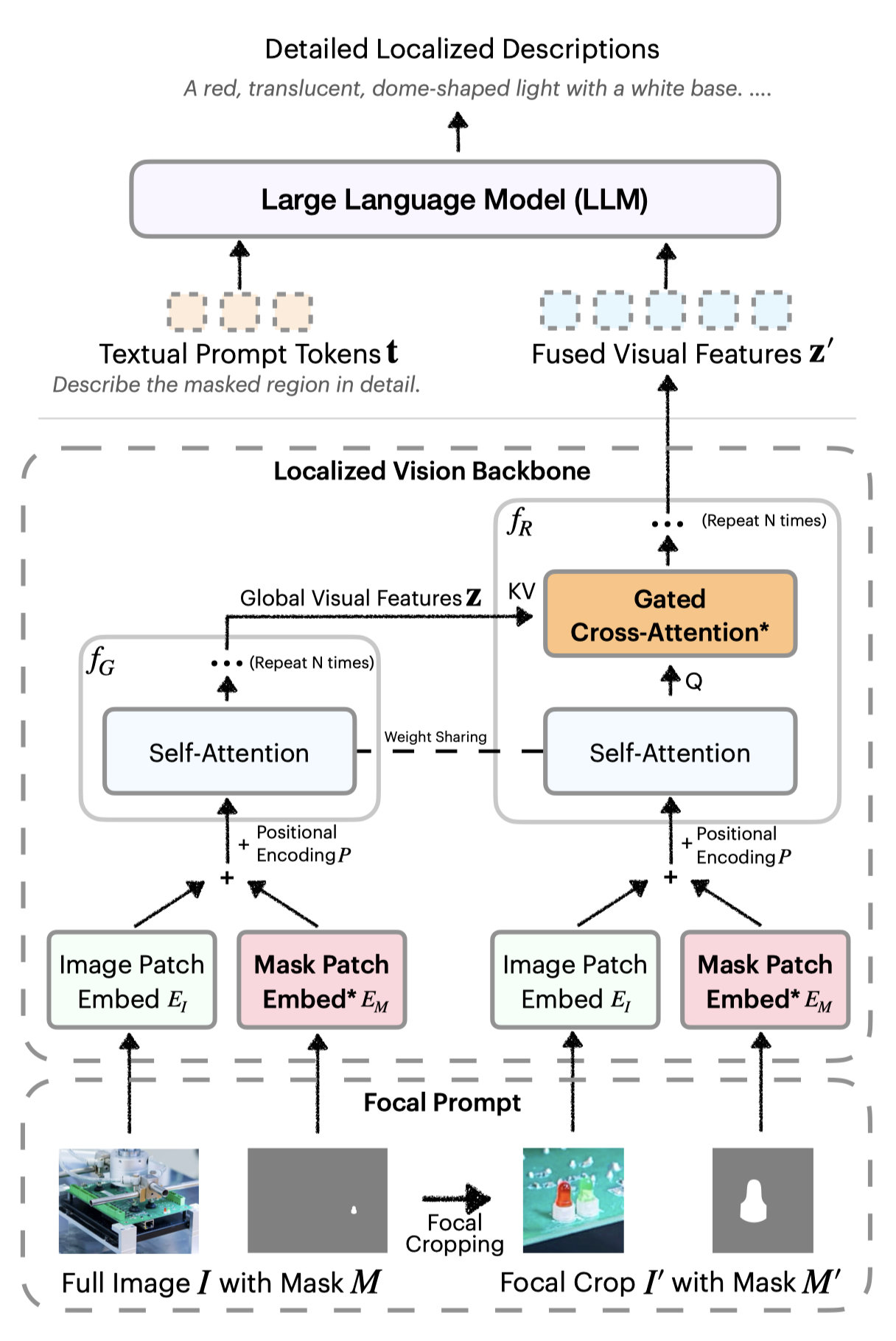

Focal Prompt

Preserves fine-grained details while maintaining broader context. This dual-view approach enables the model to capture intricate details of the selected region without losing its relationship to the surrounding environment.

Localized Vision Backbone

Grounds the specified region in its broader surroundings through gated cross-attention, which integrates global context into local regional features.

Multi-granular Descriptions

Generates descriptions at various levels of detail, from concise phrases to comprehensive multi-sentence narratives, adapting to user needs and preferences.

Video Understanding

Describes selected objects consistently across video frames, even through complex motion, occlusion, and camera movement.

Key Features of Describe Anything AI

The components that enable the Describe Anything Model to generate detailed localized descriptions.

Focal Prompt

Describe Anything AI encodes the region of interest with high token density while preserving context. The focal prompt includes both the full image and a focal crop, each with its corresponding mask, providing a detailed view alongside contextual information.

Technical Details:

- Extracts the bounding box of the masked region and expands it to include surrounding context

- Creates a focal crop of the image and mask for detailed representation

- Provides higher-resolution encoding for small objects within complex scenes

- Enforces a minimum crop size of 48 pixels per dimension for very small regions
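The crop steps above can be sketched in plain Python. This is a minimal illustration under stated assumptions, not DAM's actual preprocessing. The expansion factor `alpha` is an assumed value, the helper names (`mask_bbox`, `focal_crop_box`, `crop`) are hypothetical, and the window is shifted rather than shrunk when it would cross the image border. Only the 48-pixel minimum comes from the description above.

```python
from typing import List, Tuple

Mask = List[List[int]]           # 2D binary mask, mask[y][x] in {0, 1}
Box = Tuple[int, int, int, int]  # (x0, y0, x1, y1), x1/y1 exclusive


def mask_bbox(mask: Mask) -> Box:
    """Tight bounding box of the nonzero pixels."""
    ys = [y for y, row in enumerate(mask) if any(row)]
    xs = [x for row in mask for x, v in enumerate(row) if v]
    if not ys:
        raise ValueError("mask is empty")
    return min(xs), min(ys), max(xs) + 1, max(ys) + 1


def focal_crop_box(mask: Mask, alpha: float = 3.0, min_size: int = 48) -> Box:
    """Expand the mask's bounding box around its center to add context.

    alpha is an assumed expansion factor (hypothetical default).
    min_size enforces the 48-pixel minimum, capped by the image size.
    """
    height, width = len(mask), len(mask[0])
    x0, y0, x1, y1 = mask_bbox(mask)
    cx, cy = (x0 + x1) / 2, (y0 + y1) / 2
    # Expand, enforce the minimum size, and never exceed the image.
    w = min(max((x1 - x0) * alpha, min_size), width)
    h = min(max((y1 - y0) * alpha, min_size), height)
    # Shift the window so it stays fully inside the image.
    left = min(max(cx - w / 2, 0), width - w)
    top = min(max(cy - h / 2, 0), height - h)
    return int(round(left)), int(round(top)), int(round(left + w)), int(round(top + h))


def crop(grid: Mask, box: Box) -> Mask:
    """Cut the same box out of an image channel or a mask."""
    x0, y0, x1, y1 = box
    return [row[x0:x1] for row in grid[y0:y1]]
```

The same box is applied to both the image and the mask with `crop`, so the focal crop and its mask stay pixel-aligned. A 4x4 region near the corner of a 100x100 image yields a 48x48 crop. A 20x20 region in the center yields a 60x60 crop.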

Localized Vision Backbone

Processes both the full image and focal crop, integrating precise localization with broader context through specialized encoding and cross-attention mechanisms.

Technical Details:

- Encodes masks in a spatially aligned manner with the image

- Uses gated cross-attention to integrate global context into regional features

- Shares self-attention blocks between global and regional vision encoders

- Adds global context without increasing the sequence length passed to the language model
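The gated cross-attention idea can be sketched in plain Python. It makes several simplifying assumptions: a single head, no learned query/key/value projections, no normalization, and a Flamingo-style `tanh` gate on one scalar parameter. The function names are hypothetical. Regional tokens act as queries over the global tokens. The output has the same number of tokens as the regional input, which is why global context adds no tokens for the language model.

```python
import math
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """(n, k) @ (k, m) -> (n, m)."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def softmax(row: List[float]) -> List[float]:
    m = max(row)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def gated_cross_attention(regional: Matrix, global_tokens: Matrix, gate: float) -> Matrix:
    """Regional tokens (queries) attend to global tokens (keys and values).

    Simplified sketch, not DAM's actual layer.
    The result keeps len(regional) tokens, so sequence length is unchanged.
    """
    d = len(regional[0])
    keys_t = [list(col) for col in zip(*global_tokens)]   # (d, n_global)
    scores = matmul(regional, keys_t)                     # (n_regional, n_global)
    weights = [softmax([s / math.sqrt(d) for s in row]) for row in scores]
    attended = matmul(weights, global_tokens)             # (n_regional, d)
    g = math.tanh(gate)  # gate = 0 switches the global contribution off
    return [[r + g * a for r, a in zip(r_row, a_row)]
            for r_row, a_row in zip(regional, attended)]
```

With `gate = 0.0` the block returns the regional features unchanged. A newly inserted layer therefore leaves the pre-trained encoder's behavior intact until training opens the gate. The shared self-attention blocks mentioned above are not modeled here.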

Semi-Supervised Data Pipeline

A two-stage approach to generate high-quality training data by leveraging existing annotations and unlabeled web images for diverse localized descriptions.

Technical Details:

- Reframes captioning as a keyword expansion task using high-quality masks

- Employs self-training on web images without relying on class labels at inference

- Uses confidence-based filtering to ensure high data quality

- Enables multi-granular captioning through LLM summarization
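The two-stage pipeline can be outlined as below. This is a structural sketch only. `expand`, `describe`, and `score` are hypothetical callables. They stand in for, respectively, the captioning model used for keyword expansion in stage 1, the stage-1-trained model used for self-training, and whatever confidence signal drives filtering. The source does not specify these interfaces, and the threshold is arbitrary.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, List, Tuple


@dataclass
class RegionCaption:
    image_id: str
    mask_id: str
    caption: str


def stage1_expand_keywords(
    annotations: Iterable[Tuple[str, str, str]],
    expand: Callable[[str, str, str], str],
) -> List[RegionCaption]:
    """Stage 1: (image, mask, class keyword) -> detailed caption of the masked region."""
    return [RegionCaption(img, m, expand(img, m, kw)) for img, m, kw in annotations]


def stage2_self_train(
    regions: Iterable[Tuple[str, str]],
    describe: Callable[[str, str], str],
    score: Callable[[str, str, str], float],
    threshold: float,
) -> List[RegionCaption]:
    """Stage 2: caption unlabeled web regions and keep only confident captions."""
    kept = []
    for img, m in regions:
        caption = describe(img, m)               # no class keyword is supplied here
        if score(img, m, caption) >= threshold:  # confidence-based filtering
            kept.append(RegionCaption(img, m, caption))
    return kept
```

Stage 2 needs only images and masks, not class labels, which is what lets the pipeline scale to unlabeled web data.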

Versatile Region Specification

Accepts various forms of user input for region specification, making the model highly flexible and user-friendly for different applications.

Technical Details:

- Supports clicks, scribbles, boxes, and masks as input formats

- Converts different input types into masks using SAM and SAM 2

- For videos, requires region specification in only one frame

- Propagates the mask across frames with SAM 2, maintaining accurate tracking through occlusion and motion
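Normalizing the different input types can be sketched as a small dispatch function. The `Segmenter` type is a hypothetical interface standing in for a promptable segmenter such as SAM. It is not SAM's real API. `toy_segmenter` is a trivial stand-in that only marks the prompted pixels so the example runs. Treating a scribble as a set of point prompts is also an assumption.

```python
from typing import Callable, List, Optional, Sequence, Tuple

Mask = List[List[int]]
Point = Tuple[int, int]          # (x, y)
Box = Tuple[int, int, int, int]  # (x0, y0, x1, y1), x1/y1 exclusive
# Hypothetical segmenter interface: point prompts and/or a box prompt -> binary mask.
Segmenter = Callable[[Sequence[Point], Optional[Box]], Mask]


def to_mask(kind: str, value, segment: Segmenter) -> Mask:
    """Normalize a click, scribble, box, or mask into a binary mask."""
    if kind == "mask":
        return value                       # already in the model's input format
    if kind == "click":
        return segment([value], None)      # a single positive point
    if kind == "scribble":
        return segment(list(value), None)  # scribble treated as a set of points
    if kind == "box":
        return segment([], value)
    raise ValueError(f"unsupported region prompt: {kind!r}")


def toy_segmenter(points: Sequence[Point], box: Optional[Box], size: int = 5) -> Mask:
    """Illustration only: marks prompted pixels, performs no real segmentation."""
    mask = [[0] * size for _ in range(size)]
    for x, y in points:
        mask[y][x] = 1
    if box is not None:
        x0, y0, x1, y1 = box
        for y in range(y0, y1):
            for x in range(x0, x1):
                mask[y][x] = 1
    return mask
```

For video, the mask produced for one frame would then be handed to a video segmenter such as SAM 2 for propagation. That step is not modeled here.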

Native Video Capabilities

Extends seamlessly to videos by processing sequences of frames and their corresponding masks to generate coherent temporal descriptions.

Technical Details:

- Processes sequences of frames with corresponding masks

- Concatenates visual features along the sequence dimension

- Describes motion patterns and changes over time

- Handles challenging conditions like occlusion and camera movement
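Concatenation along the sequence dimension can be illustrated in plain Python. In this sketch, each frame and its mask are assumed to have already been encoded into a list of regional tokens. The token counts and the helper name `concat_frame_features` are hypothetical. The language model then sees the tokens of frame 1, followed by frame 2, and so on, so the total sequence length grows linearly with the number of frames.

```python
from typing import List

Tokens = List[List[float]]  # (num_tokens, dim) regional features for one frame


def concat_frame_features(per_frame: List[Tokens]) -> Tokens:
    """Concatenate per-frame token sequences along the sequence (token) axis."""
    if not per_frame:
        raise ValueError("need at least one frame")
    dim = len(per_frame[0][0])
    for tokens in per_frame:
        if any(len(t) != dim for t in tokens):
            raise ValueError("all tokens must share the same feature dimension")
    # Frame order is preserved: all tokens of frame 0, then frame 1, and so on.
    return [token for tokens in per_frame for token in tokens]
```

For example, 4 frames with 3 tokens each produce a 12-token sequence in frame order.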

Zero-Shot Capabilities

Demonstrates emergent abilities in tasks it wasn't explicitly trained for, extending its utility beyond its primary training objectives.

Technical Details:

- Answers region-specific questions without explicit QA training

- Integrates information from multiple views for 3D object understanding

- Provides multi-granular descriptions with different prompts

- Identifies properties like colors, materials, and patterns on demand

State-of-the-Art Performance

Achieves superior results across 7 benchmarks spanning different granularities of regional captioning for both images and videos.

Technical Details:

- Outperforms the previous best on LVIS and PACO for keyword-level captioning

- Achieves a 12.3% improvement on Flickr30k Entities for phrase-level captioning

- Excels at detailed captioning on Ref-L4, with a 33.4% improvement

- Surpasses closed-source models like GPT-4o on DLC-Bench

Implementation Efficiency

Despite its advanced capabilities, DAM remains computationally efficient. The model shares parameters between vision encoders and initializes new components to zero to preserve pre-training capabilities. This design allows DAM to achieve state-of-the-art performance with only ~1.5M training samples, significantly fewer than models requiring full pretraining.
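The effect of zero initialization can be checked with a toy example. This sketch assumes the mask has its own patch-embedding weights, whose output is added to the image patch embedding at the same spatial location. The names, toy weight values, and 2x3 shapes are illustrative. With the mask weights at zero, the combined embedding equals the pre-trained image embedding exactly, so training starts from the pre-trained model's behavior.

```python
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def linear(weight: Matrix, x: Vector) -> Vector:
    """Matrix-vector product: one patch-embedding projection."""
    return [sum(w * v for w, v in zip(row, x)) for row in weight]


def zeros(rows: int, cols: int) -> Matrix:
    return [[0.0] * cols for _ in range(rows)]


def embed_patch(image_patch: Vector, mask_patch: Vector, w_image: Matrix, w_mask: Matrix) -> Vector:
    """Embed the image patch and the spatially aligned mask patch, then sum them."""
    image_emb = linear(w_image, image_patch)
    mask_emb = linear(w_mask, mask_patch)
    return [a + b for a, b in zip(image_emb, mask_emb)]


# Toy "pre-trained" image projection and a newly added, zero-initialized mask projection.
W_IMAGE = [[0.5, -1.0, 2.0], [1.0, 0.0, 0.25]]
W_MASK = zeros(2, 3)
```

Once training updates `W_MASK`, the mask starts to shift the embedding, for example from `[6.5, 2.0]` to `[7.5, 2.0]` for the toy patch used in the check. Until then, the encoder behaves exactly as it did before the new component was added.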

How Describe Anything AI Works

Describe Anything AI generates detailed descriptions of user-specified regions in images and videos in three steps.

Input Selection

Users specify regions of interest using clicks, scribbles, boxes, or masks. For videos, specifying the region in any frame is sufficient for tracking across the sequence.

Focal Processing

The model receives a "focal prompt": the full image and a focal crop of the region, each with its mask. This preserves fine details while maintaining contextual awareness.

Description Generation

The localized vision backbone integrates region-specific information with global context to generate detailed, accurate descriptions at your preferred level of detail.

Technical Architecture

Focal Prompt

Ensures high-resolution encoding of targeted regions while preserving context from the full image.

Localized Vision Backbone

Integrates precise localization with broader context through gated cross-attention mechanisms.

Large Language Model

Translates visual features into detailed natural language descriptions of the specified region.

Applications of Describe Anything AI

Describe Anything AI for Detailed Localized Image Captioning

Describe Anything AI generates detailed and accurate descriptions of specific regions within images, preserving both fine-grained details and global context.

Example Output:

A red, translucent, dome-shaped light with a white base and subtle reflections. The fixture has a smooth surface with a gentle curvature.

Describe Anything AI for Video Analysis

Describe Anything AI describes user-specified objects in videos, even under challenging conditions such as motion, occlusion, and camera movements.

Example Output:

A person wearing a black shirt and dark shorts is captured in a dynamic sequence of movement. The individual appears to be in a running motion, with their body slightly leaning forward.

Multi-Granular Descriptions

Control the amount of detail and length of descriptions with different prompts, from brief summaries to comprehensive analyses.

Example Output:

Brief: "A modern chair with curved backrest and light wood legs."

Detailed: "A modern chair with a curved backrest and textured, light brown fabric upholstery. The chair features a smooth, rounded top edge and slightly tapered back..."
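Granularity control through the prompt can be sketched as follows. The prompt wording, the `GRANULARITY_PROMPTS` table, and the `describe` callable are all hypothetical. DAM's actual prompts and interface may differ. The only point is that the image and mask stay fixed while the text instruction changes.

```python
from typing import Callable, Dict

# Hypothetical instructions; the real prompt wording may differ.
GRANULARITY_PROMPTS: Dict[str, str] = {
    "brief": "Describe the masked region in one short phrase.",
    "detailed": "Describe the masked region in detail.",
}


def describe_region(image, mask, level: str, describe: Callable[[object, object, str], str]) -> str:
    """Same image and mask; only the instruction selects the level of detail."""
    if level not in GRANULARITY_PROMPTS:
        raise ValueError(f"unknown granularity: {level!r}")
    return describe(image, mask, GRANULARITY_PROMPTS[level])
```

A region-specific question could be passed through the same `describe` interface in place of the granularity instruction.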

Zero-shot 3D Object Captioning

Describe objects in multi-view datasets by integrating information from multiple frames to provide coherent descriptions of 3D objects.

Example Output:

A blue SUV with a boxy design, featuring a spare tire mounted on the rear door. It has a four-door configuration with black side mirrors and tinted windows.

Zero-shot QA Capabilities

Answer questions about specific regions in images, identifying properties like colors, materials, and patterns.

Example Output:

Q: "What is the main color of the clothing in the masked area?"

A: "The main color of the clothing in the image is blue."

Frequently Asked Questions about Describe Anything AI

What makes Describe Anything AI different from other vision-language models?

Unlike general Vision-Language Models (VLMs), Describe Anything AI is specifically designed for detailed localized captioning. It uses a focal prompt and localized vision backbone to balance local detail with global context, allowing it to generate precise descriptions of specific regions while maintaining contextual understanding.

How does Describe Anything AI handle different types of user inputs for region specification?

Describe Anything AI accepts various forms of region specification, including clicks, scribbles, boxes, and masks. For videos, users only need to specify the region in a single frame. The system uses the Segment Anything Model (SAM), and SAM 2 for videos, to convert these inputs into masks that the model can process.

What is a 'focal prompt' in Describe Anything AI?

The focal prompt is a key innovation in Describe Anything AI that includes both the full image and a focused crop centered around the specified area, along with their corresponding masks. This approach provides high token density for detailed representation of the region while preserving the broader context.

How does Describe Anything AI perform compared to existing approaches?

Describe Anything AI achieves state-of-the-art performance across 7 benchmarks in keyword-level, phrase-level, and detailed multi-sentence captioning for both images and videos. It outperforms the previous best models by a wide margin, particularly in challenging scenarios like describing small objects in complex scenes.

What data was used to train Describe Anything AI?

Describe Anything AI uses a semi-supervised learning (SSL)-based data pipeline (DLC-SDP) that leverages high-quality segmentation datasets and unlabeled web images. This approach combines human-annotated masks and keywords with self-training on web-scale data, enabling scalable and diverse data curation.

Can Describe Anything AI work with small or partially visible objects?

Yes, Describe Anything AI is particularly effective at describing small or partially visible objects in complex scenes. The focal prompt and localized vision backbone allow the model to capture fine-grained details that traditional approaches might lose.

How does Describe Anything AI handle video captioning?

For videos, Describe Anything AI processes sequences of frames and their corresponding masks. The visual features from all frames are concatenated and fed into the language model to generate detailed localized descriptions. The model can accurately describe objects under challenging conditions like motion and occlusion.